Introduction

A robust data engineering framework cannot be deployed without a sophisticated workflow management tool. I used Pentaho Kettle extensively for large-scale deployments for a significant period of my career. The objective of this post is to explore a few obvious challenges of designing and deploying data engineering pipelines, with a specific focus on the trigger rules of Apache Airflow 2.0. In the second section, we shall study the 10 different branching strategies that Airflow provides to build complex data pipelines.
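Before going further, it may help to see roughly what a trigger rule decides. The sketch below is a simplified, pure-Python model, not Airflow's implementation: `State` and `should_run` are hypothetical names, the rule strings mirror Airflow's `TriggerRule` values, and it assumes every upstream task has already finished. Real Airflow also fires `one_success` and `one_failed` as soon as one parent reaches that state, propagates skips, and has further rules (for example `none_failed_or_skipped` and `dummy`) that are left out here.

```python
from enum import Enum
from typing import Iterable


class State(str, Enum):
    """Finished upstream states this sketch considers (values mirror Airflow state names)."""
    SUCCESS = "success"
    FAILED = "failed"
    UPSTREAM_FAILED = "upstream_failed"
    SKIPPED = "skipped"


def should_run(trigger_rule: str, upstream: Iterable[State]) -> bool:
    """Simplified check: may a task with `trigger_rule` run, given its finished parents?"""
    states = list(upstream)
    n = len(states)
    succeeded = sum(s == State.SUCCESS for s in states)
    failed = sum(s in (State.FAILED, State.UPSTREAM_FAILED) for s in states)
    skipped = sum(s == State.SKIPPED for s in states)
    rules = {
        "all_success": succeeded == n,   # every parent succeeded
        "all_failed": failed == n,       # every parent failed or is upstream_failed
        "all_done": True,                # parents only need to be finished
        "one_success": succeeded >= 1,   # at least one parent succeeded
        "one_failed": failed >= 1,       # at least one parent failed
        "none_failed": failed == 0,      # parents succeeded or were skipped
        "none_skipped": skipped == 0,    # no parent was skipped
    }
    if trigger_rule not in rules:
        raise ValueError(f"rule not covered by this sketch: {trigger_rule}")
    return rules[trigger_rule]


# Branch join: one branch ran, the other was skipped by a branching task.
join_upstream = [State.SUCCESS, State.SKIPPED]
print(should_run("all_success", join_upstream))  # False: the join does not run
print(should_run("none_failed", join_upstream))  # True: the join runs
```

The branch-join case is why trigger rules matter for branching: with the default `all_success`, a join task placed after a branch is held back by the skipped path, whereas a rule such as `none_failed` lets it run.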

I thank Marc Lamberti for his guide to Apache Airflow; this post is just an attempt to complete what he started in his blog. Image credit: ETL Pipeline with Airflow, Spark, S3, MongoDB and Amazon Redshift.


This post falls under a new topic, Data Engineering (at scale). In this post, we shall explore the challenges involved in managing data, people issues, conventional approaches that can be improved without much effort, and the trigger rules of Apache Airflow. Source code for all the DAGs explained in this post can be found in this repo.

Moving from building in-house data pipelines, to using Pentaho Kettle at enterprise scale, to enjoying the flexibility of Apache Airflow is one of the most significant parts of my data journey. I once argued that those data pipeline processes could easily be built in-house rather than depending on an external product. I was so ignorant that I asked, "Why would someone pay so much for a piece of code that connects systems and schedules events?" To understand the value of an integration platform or a workflow management system, one should strive for excellence in maintaining and serving reliable data at large scale.


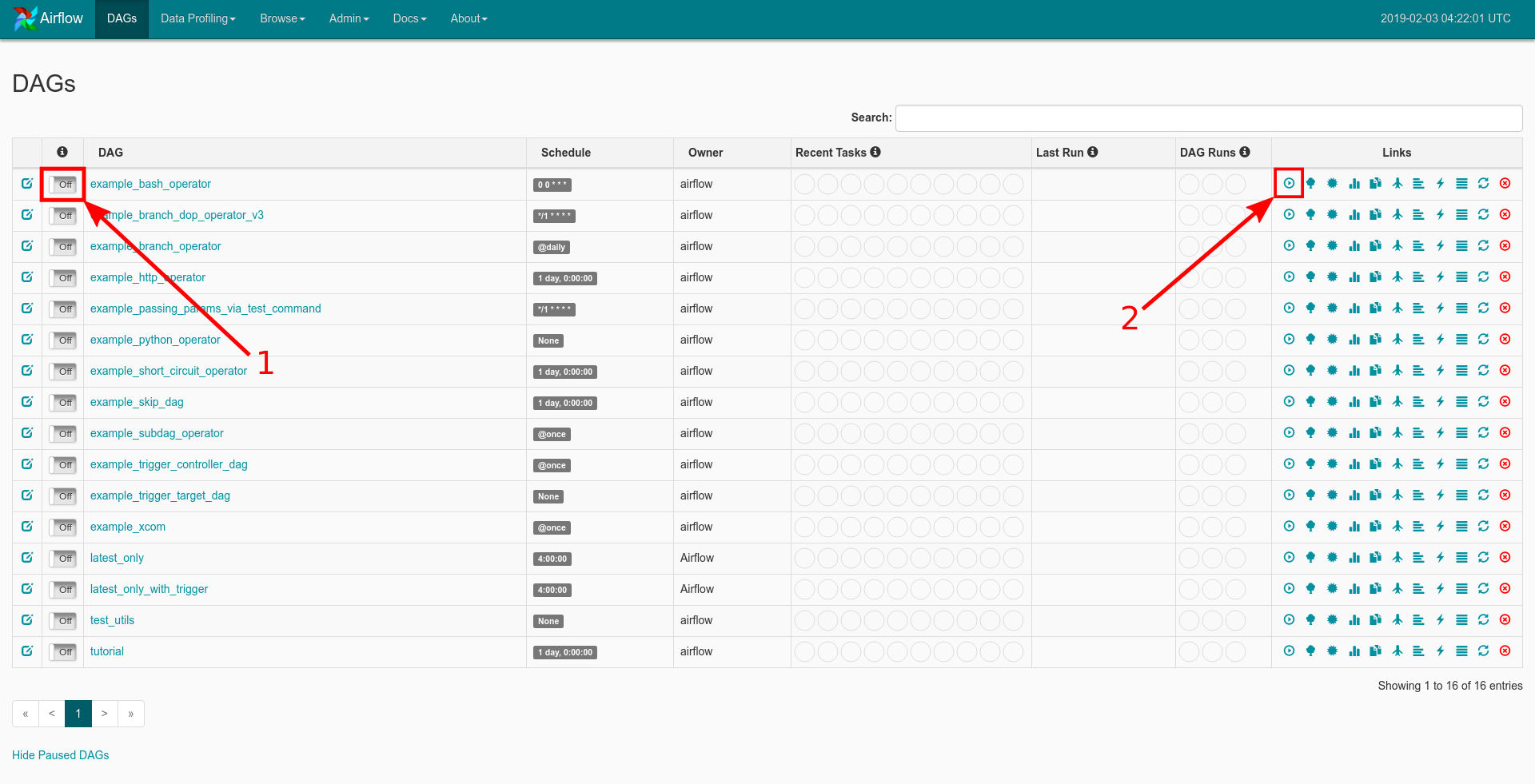

Airflow Trigger Rules for Building Complex Data Pipelines Explained, and My Initial Days of Airflow Selection and Experience

Dell acquiring Boomi (circa 2010) was a big topic of discussion among my peers then. I was just starting to shift my career from system software and device driver development to building distributed IT products at enterprise scale.

I would like to create tasks based on a list, chained as `task_1 >> task_2 >> task_3`. The idea is that each task should trigger an external DAG: DAG_A should trigger DAG_B to start, and once all tasks in DAG_B are complete, the next task in DAG_A should start. In other words, DAG_A should wait for the last task in DAG_B to succeed before triggering its next task.

To create the tasks, my current solution imports `DAG` from `airflow` and `PythonOperator`, defines a callable `ex_func_airflow(i)`, and sets `start_date=datetime(2023, 1, …)`.

I then tried a `trigger_operator = TriggerDagRunOperator(…)` followed by a `wait_task`, wired as `previous_task >> trigger_operator >> wait_task >> task`, again with `start_date=datetime(2023, 1, …)`. DAG_B is TEST_DAG, which has the task that must be completed before task_2 in DAG_A can start. However, when wait_task starts, it stays running and never lets task_2 in DAG_A run.

To make sure that each task triggers an external DAG and waits for its completion before moving to the next task, you can use the ExternalTaskSensor operator. This operator waits for a specific external task in another DAG to complete before proceeding.
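One plausible reason a sensor like wait_task keeps running, offered as a hedged explanation rather than a confirmed diagnosis: by default, ExternalTaskSensor looks for the external run whose logical (execution) date equals the sensing run's own date, optionally shifted by `execution_delta` or mapped by `execution_date_fn`, while a run created by TriggerDagRunOperator gets its own date (by default, the moment it was triggered). The pure-Python model below illustrates that matching rule. `DagRun` and `sensor_poke` are hypothetical names, it works at the DAG-run level only, and the dates are invented for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, Iterable, Optional, Tuple


@dataclass(frozen=True)
class DagRun:
    """Hypothetical stand-in for one external DAG run (not an Airflow class)."""
    dag_id: str
    logical_date: datetime
    state: str  # e.g. "running", "success", "failed"


def sensor_poke(
    runs: Iterable[DagRun],
    external_dag_id: str,
    sensing_date: datetime,
    execution_delta: Optional[timedelta] = None,
    execution_date_fn: Optional[Callable[[datetime], datetime]] = None,
    allowed_states: Tuple[str, ...] = ("success",),
) -> bool:
    """Simplified model of one ExternalTaskSensor poke at the DAG-run level.

    Only a run of `external_dag_id` whose logical date equals the target date
    counts; any other run is ignored, however recently it finished.
    """
    # Like the real sensor, refuse both date-shifting options at once.
    if execution_delta is not None and execution_date_fn is not None:
        raise ValueError("pass execution_delta or execution_date_fn, not both")
    if execution_date_fn is not None:
        target = execution_date_fn(sensing_date)
    elif execution_delta is not None:
        target = sensing_date - execution_delta
    else:
        target = sensing_date  # default: same logical date as the sensing run
    return any(
        run.dag_id == external_dag_id
        and run.logical_date == target
        and run.state in allowed_states
        for run in runs
    )


# Hypothetical dates: DAG_A's run vs. the TEST_DAG run it triggered later.
parent_date = datetime(2024, 3, 1)
triggered = DagRun("TEST_DAG", datetime(2024, 3, 2, 8, 15), "success")

# Finished, but under a different logical date: the sensor keeps waiting.
print(sensor_poke([triggered], "TEST_DAG", parent_date))  # False

# A finished run created with the parent's logical date is found.
aligned = DagRun("TEST_DAG", parent_date, "success")
print(sensor_poke([aligned], "TEST_DAG", parent_date))  # True
```

In Airflow itself, the corresponding options would be to give the triggered run the parent's logical date (TriggerDagRunOperator accepts a templated `execution_date` in Airflow 2.0) or to point the sensor at the right date with `execution_delta` or `execution_date_fn`. Newer Airflow 2.x releases also accept `wait_for_completion=True` on TriggerDagRunOperator, which waits for the triggered run without a separate sensor. Check the operator reference for your Airflow version before relying on these parameter names.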
